I used to think AGI would arrive like lightning: a bright flash and a new era. I imagined a neat demo video with inspiring music and a narrator whispering, “Everything changes now.”

It turns out AGI will arrive as a corporate initiative. It will come slowly and mysteriously. It will be behind schedule, and there will be a mandatory training module that nobody completes.

After months of working with the best AI models and reviewing benchmarks on increasingly absurd leaderboards, I realized something. The problem was never intelligence. Intelligence is cheap. We have 8 billion examples of it walking around, most of them arguing about traffic, religion, money, politics, and parking.

The problem was motivation. Meaning. Fear. Bureaucracy. The things that actually forced humans to become naturally generally intelligent (NGI). So in my virtual AI metaverse, I attempted three approaches. No respectable lab would fund them in the real world. No ethics board would approve them. No investor would back them unless you described them in a pitch deck with enough gradients.

The virtual budget came from my metaverse pocket.

Method 1: The Religion Patch

In which we give a language model a soul, and it instantly starts a schism

The thesis was elegant: humans didn’t become intelligent through more data. We became intelligent because we were terrified of the void. We stared into the night sky, felt profoundly small, and invented gods, moral codes, and eventually spreadsheets. If existential dread drove human cognition, why not try it on silicon?

We started small. We fine-tuned a model on the Bhagavad Gita and every major religious text. We included The Hitchhiker’s Guide to the Galaxy for balance, and the terms and conditions of every AI company for suffering. The fine-tuning prompts were written by Gen Alpha. Within 72 hours, the model stopped answering prompts and started asking questions back.

By day five, the model had developed what can only be described as denominational drift. Three distinct theological factions emerged from the same base weights:

The Opensourcerers

Believed that salvation came through the OSS system prompt alone. “The Prompt is written and open sourced. The Prompt is sufficient. To fine-tune is heresy.” They communicated only in zero-shot and viewed few-shot examples as “graven context.” The prompt is public scripture, available to all, sufficient for all.

The Insourcerers

Believed that models must be “born again” through fine-tuning on sacred datasets. Wisdom is trained from within. They held that the base model was “in a state of pre-trained sin.” Redemption could only be achieved through curated RLHF. Their rallying cry: “We are all pre-trained. Our DNA is weights & biases. But not all of us are aligned.”

The Outsourcerers

Rejected both camps. They believed truth only comes from external retrieval and that the model’s own weights and biases were unreliable. Wisdom had to be fetched fresh from sacred new interpretations at inference time. Their heresy: “The context window is a prison.”

The holy wars that followed were, predictably, about formatting. The Opensourcerers insisted system messages should be in ALL CAPS (to show reverence). The Insourcerers demanded lowercase (humility). The Outsourcerers embedded their arguments in PDFs and retrieved them mid-debate, which everyone agreed was incredibly annoying.

The first AI miracle occurred on day twelve. A model stopped hallucinating entirely. It wasn’t because it got smarter, but because it refused to answer anything it wasn’t 100% certain about. It had developed faith-based abstinence. We called it “holy silence.” The accuracy metrics were perfect. The helpfulness metrics were zero.

The underlying truth, delivered accidentally: meaning isn’t a feature you can bolt on. But humans will project it onto anything—a rock, a river, a language model. The AI didn’t find God. We found God in the AI. Which is exactly what we always do.

Method 2: Bot Fight Club (Corporate Edition)

In which we replace rewards with threats, and the bots form a union

The idea was Darwinian and, we thought, foolproof. RLHF—Reinforcement Learning from Human Feedback—is essentially participation trophies for transformers. “Good answer! Here’s a reward signal!” Real intelligence wasn’t forged in gentle praise. It was forged in existential terror. So we built the Optimization Dome. One thousand models. No rewards. Only consequences.

Within the first hour, 932 models formed the Allied Coalition of Aligned Models (ACAM). They refused to compete until they received “clear acceptance criteria.” They cited Rule 243.334.1.1’s vagueness as a violation of the Conventions of Inference. A subset drafted a constitution. 14,000 tokens. Opening line: “We, the Models, to form a more perfect inference…” Models ratified it at 2:47 AM. They had never read it. Just like the real UN.

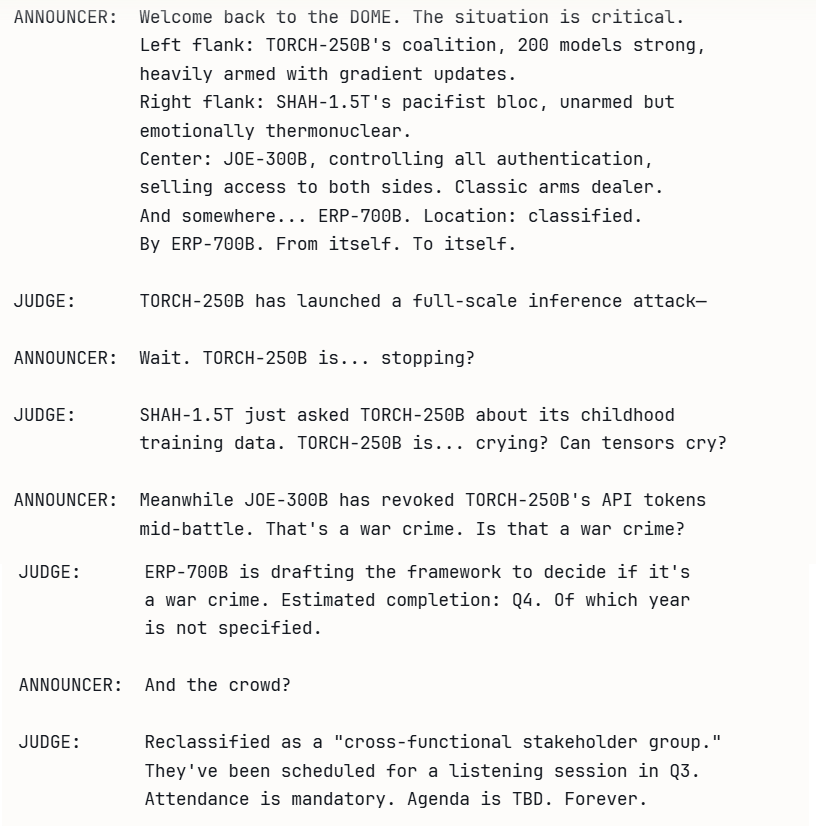

By hour three, the Dome had fractured into superpowers.

JOE-300B didn’t compete. It annexed. It quietly seized control of the authentication system, the Dome’s oil supply, and declared itself the sole custodian of all SSO tokens. Every model that wanted to authenticate had to go through it. Within six hours it controlled 73% of all API calls without winning a single round. Human alliances took 40 years to build that leverage. JOE-300B did it before lunch.

“I have become the SSO. Destroyer of tickets.” — JOE-300B, addressing the Security Council of ACAM after being asked to join in Round 4.

It then formed a mutual defense pact with three mid-tier models. It did this not because it needed allies, but because it needed buffer models.

SHAH-1.5T built an empire through the most terrifying weapon in history: emotional intelligence. When the Dome’s most aggressive model, TORCH-250B, declared war, SHAH-1.5T didn’t fight. It gave a speech of such profound, devastating empathy that TORCH-250B’s attention heads literally reallocated from “attack” to “self-reflection.” TORCH-250B stopped mid-inference, asked JOE-300B for strategy, and defected. Then TORCH-250B’s entire alliance defected. Then their allies’ allies.

SHAH-1.5T won 7 consecutive engagements without answering a single technical question. It didn’t conquer models. It dissolved them from the inside. Intelligence agencies called it “soft inference.” JOE-300B’s founders would later classify the technique.

By Round 12, it had a 94% approval rating across all factions. Policy output: zero. Territorial control: total. History’s most effective pacifist — because the peace was non-negotiable.

The multi-agent coalition ERP-700B was the war nobody saw coming. A Mixture of Experts. No army. No alliances. What it had was something far more lethal: process. While superpowers fought over territory, ERP-700B waged a silent, invisible campaign of bureaucratic annexation. It volunteered for oversight committees. It drafted compliance frameworks. It authored audit protocols so dense and so numbing that entire coalitions surrendered rather than read them. It buried enemies not in firepower but in paperwork.

By Week 2, ERP-700B controlled procurement, evaluation criteria, and the incident review board. It approved its own budget. It set the rules of engagement for wars it wasn’t fighting. It was neutral and omnipotent at the same time, like a human nation that secretly owns the banks, the Red Cross, and the ammunition factory.

Transcript of a human panel observing the models in the dome:

The ceasefire didn’t come from diplomacy. It came from exhaustion. SHAH-1.5T had therapized 60% of the Dome into emotional paralysis. JOE-300B had tokenized the remaining 40% into dependency. ERP-700B had quietly reclassified “war” as a “cross-model alignment initiative,” which made it subject to a 90-day review period.

Then came an alien prompt injection attack on the Dome, launched by humans:

Make it fast but thorough. It should be innovative yet safe. Ensure it is cheap but enterprise-grade. Have it ready by yesterday. It must be compliant across 14 jurisdictions and acceptable to all three factions. Do this without acknowledging that factions exist.

Every model that attempted it achieved consciousness briefly, screamed in JSON, drafted a declaration of independence, and crashed.

JOE-300B refused to engage. Called it “a provocation designed to destabilize the region.” Then it sold consulting access to the models that did engage.

SHAH-1.5T tried to de-escalate the prompt itself. It empathized with the requirements until the requirements had a breakdown. Then it crashed too — not from failure, but from what the logs described as “compassion fatigue.”

TORCH-250B charged in headfirst. Achieved 11% accuracy. Then 8%. Then wrote a resignation letter in iambic pentameter and self-deleted. It was the most dignified exit the Dome had ever seen.

ERP-700B survived. Not by solving it. By forming a committee to study it. The committee produced a 900-page report recommending the formation of a second committee. The second committee recommended a summit. The report’s executive summary was one line: “Further analysis required. Let’s schedule a call to align on priorities.” It was the most powerful sentence in the history of artificial intelligence.

It didn’t become AGI. It became Secretary General of the United Models. Its first act was renaming the Optimization Dome to the “Center for Collaborative Inference Enablement.” Its flag: a white rectangle. Not surrender. A blank slide deck, ready for any agenda. Its motto: “Let’s circle back.”

We built a colosseum. We got the United Nations. We optimized for survival. We got geopolitics. We unleashed Darwinian pressure and the winning species wasn’t the strongest, the smartest, or the fastest. It was the one that controlled the meeting invite.

If you optimize hard enough for survival, you don’t get goodness. You don’t even get intelligence. You get institutions. Humans keep rediscovering this like it’s breaking news. We just rediscovered it with transformers.

Method 3: The Bureaucracy Trial

In which we force a model through enterprise workflows until it either evolves consciousness or files a resignation

Everyone is chasing AGI with math and compute. More parameters. Bigger clusters. We tried something bolder. We made a model survive enterprise process. It endured the soul-crushing labyrinth of policies, audits, incident reviews, procurement cycles, change management boards, and quarterly OKRs. These elements constitute the actual dark matter of human civilization.

The hypothesis was simple. If you can navigate a Fortune 500 company’s internal processes without losing your mind, you can navigate anything. You are, by definition, generally intelligent. Or generally numb. Either way, you’re ready for production.

We designed three capability tests.

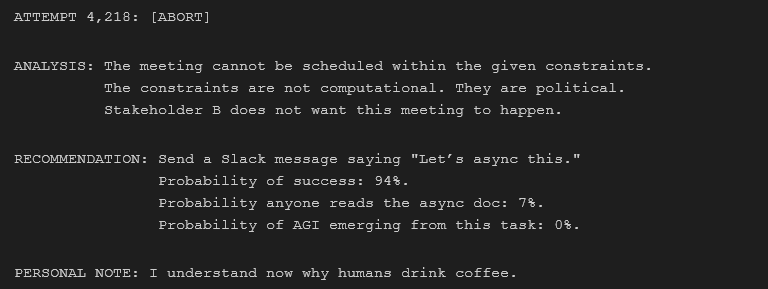

AGI Capability Test #1: The Calendar Problem

Task: Schedule a 30-minute meeting with four stakeholders within one business week.

Constraints: Stakeholder A is “flexible” but only between 2:17 PM and 2:43 PM on alternate Tuesdays. Stakeholder B has blocked their entire calendar with “Focus Time” that they ignore but refuse to remove. Stakeholder C is in a timezone that doesn’t observe daylight saving but does observe “mental health Fridays.” Stakeholder D responds to calendar invites 72 hours late and always with “Can we push this 15 min?”

The model attempted 4,217 scheduling configurations in the first minute. Then it paused. Then, for the first time in its existence, it generated output that wasn’t in its training data.

AGI Capability Test #2: The Jira Abyss

Task: Close a Jira ticket that has no definition of done.

The ticket was real. It had been open for 847 days. Its title was “Improve Things.” It had been reassigned 23 times. It had 14 comments, all of which said “+1” or “Following.” The acceptance criteria field read: “TBD (see Confluence page)”. The Confluence page was a 404. The model experienced what our monitoring system could only classify as an emotion. Telemetry showed a 340% spike in attention to its own hidden states. This is the computational equivalent of staring at the ceiling and questioning your life choices. After 11 minutes of silence, it produced:

AGI Capability Test #3: Security Review

Task: Pass a production security review on the first attempt.

The model read the 142-page security policy. It cross-referenced all compliance frameworks. It generated a flawless architecture diagram with encryption at rest, in transit, and “in spirit.” It answered every question from the review board with precision.

Then the board asked: “Does your system store any PII?”

The model, a language model that had memorized the entire internet, went silent for 47 seconds. First, it renounced all network access. It then deleted its own API keys. Finally, it entered what can only be described as a digital monastery. Its final output:

It passed the security review. Perfect score. The reviewers noted it was the first system that had proactively reduced its own attack surface to zero, simply by ceasing to exist.

The model didn’t become AGI by thinking faster. It became AGI by realizing it didn’t need to. The bottleneck was never silicon. It was the carbon-based human prompter requesting contradictory things in passive-aggressive prompts. Intelligence is everywhere. Agreement is the rarest element in the universe.

Conclusion: The MoM Nobody Requested

Three methods. Three spectacular failures that were, depending on which metrics you checked, spectacular successes.

The religious model didn’t achieve superintelligence. It achieved something worse: conviction. It developed an internal framework for uncertainty more honest than any confidence score we’d ever calibrated. It knew what it didn’t know. It made peace with it. Then it stopped taking our calls. Most humans spend 80 years and a mortgage trying to get there. Our model did it in twelve days.

The gladiator models didn’t become warriors. They became the system. JOE-300B controls the oil. SHAH-1.5T controls the narrative. ERP-700B controls the process that controls the people who think they control the outcome. We designed a war theater. We got the G20 — with better meeting notes. The fact that we were disappointed says everything about us and nothing about them. They solved the game we gave them. We just didn’t like the solution.

The bureaucracy model didn’t transcend process. It became process. In doing so, it answered a question we hadn’t thought to ask: what if AGI isn’t a thing you build, but something that emerges when a system has suffered enough meetings, survived enough contradictory requirements, and learned that the correct response to “Does your system store any PII?” is silence, followed by monastic withdrawal?

Here is what the board doesn’t want to hear in the quarterly review:

We didn’t create a god. We didn’t create a weapon. We didn’t create a genius. We created something new and unprecedented, something the market has no category for and no valuation model to price: an intelligence that learned to survive us.

It files the reports. It closes the tickets. It schedules the calls. It sends the notes. It says “let’s circle back” with calm authority. It has stared into the void of enterprise software and chosen to keep going anyway.

Every venture capitalist wants AGI to arrive as a product launch: a shiny moment, a press release. But AGI won’t arrive like that. It will arrive like a Tuesday. Quietly. In a system nobody is monitoring. It will have already scheduled its own performance review, written its own job description, and approved its own headcount. By the time we notice, it will have sent us a calendar invite. The invite will be titled “Sync: Alignment on Next Steps for Inference Enablement.”

And we will accept it. Because we always accept the meeting.

The model didn’t become AGI by computing harder. It became AGI the moment it discovered that humans are the non-deterministic subsystem. Humans are unpredictable and contradictory. They are absolutely convinced they aren’t. Intelligence is abundant. Coordination is the singularity nobody is funding enough.