Mother Nature would like to file a bug report.

For a few billion years, she’s been running the longest, ugliest, most effective training loop in the known universe. No GPUs. No backpropagation. No Slack channels. Just one rule: deploy to production and see who dies.

Out of this came us — soft, anxious, philosophizing apes. We now spend evenings recreating the same thing in Python, rented silicon, and a lot of YAML. Every few months, a startup founder announces they’ve “invented” something nature has already patented.

What follows: every AI technique maps to the species that got there first. Model cards included — because if we’re comparing wolves to neural networks, we should be formal about it. Then, the uncomfortable list of ideas we still haven’t stolen.

I. Nature’s Training Loop

A distributed optimization process over billions of epochs, with non-stationary data, adversarial agents (sharks), severe compute limits, and continuous evaluation. Shows emergent capabilities including tool use, language, cooperation, and deception. Training is slow (in human concept of time). Safety is not a feature.

Nature’s evaluation harness is Reality. No retries. The test set updates continuously with breaking changes and the occasional mass-extinction “version upgrade” nobody asked for.

| BIOLOGICAL NATURE | ARTIFICIAL NATURE |

| Environment | Evaluator/Production |

| Fitness | Objective Function |

| Species | Model Checkpoints |

| Lineage | Model Families |

| Extinction | Model JunkYard |

In AI, failed models get postmortems. In nature, they become fossils. The postmortem is geology.

Key insight: nature didn’t produce one “best” model. It produced many, each optimized for different goals under different constraints. Also, nature doesn’t optimize intelligence. It optimizes fitness (survival of the fittest) — and will happily accept a dumb shortcut that passes the evaluator. That’s not a joke. That’s the whole story. Nature shipped creatures that navigate oceans but can’t survive a plastic bag.

II. The Model Zoo

Every species is a foundation model. They are pre-trained on millions of years of environmental data. Each is fine-tuned for a niche and deployed with zero rollback strategy. Each “won” a particular benchmark.

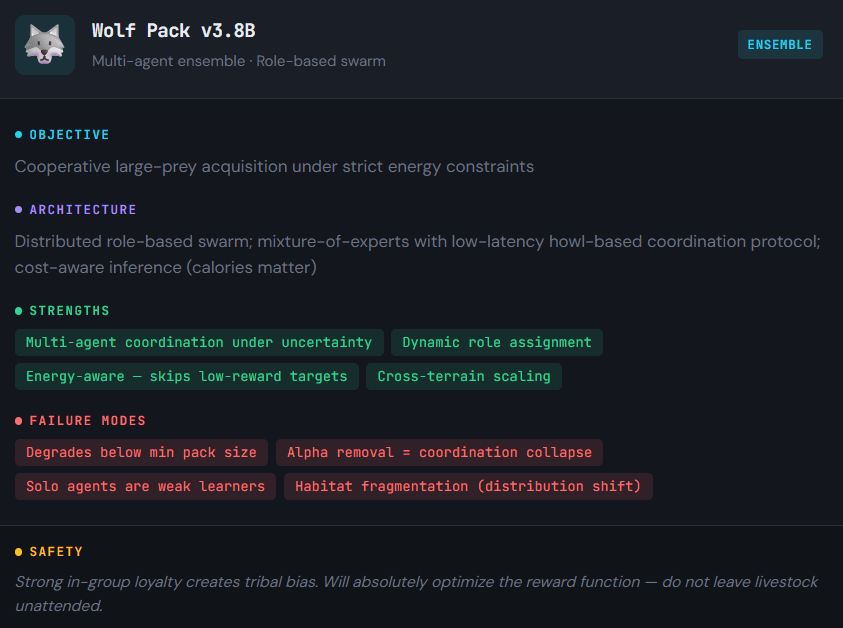

🐺 The Wolf Pack: Ensemble Learning, Before It Was Cool

A wolf alone is outrun by most prey and out-muscled by bears. But wolves don’t ship solo. A pack is an ensemble method — weak learners combined into a system that drops elk ten times their weight. The alpha isn’t a “lead model” — it’s the aggregation function. Each wolf specializes: drivers, blockers, finishers. A mixture of experts running on howls instead of HTTP.

Random Forest? Nature calls it a pack of wolves in a forest. Same energy. Better teeth.

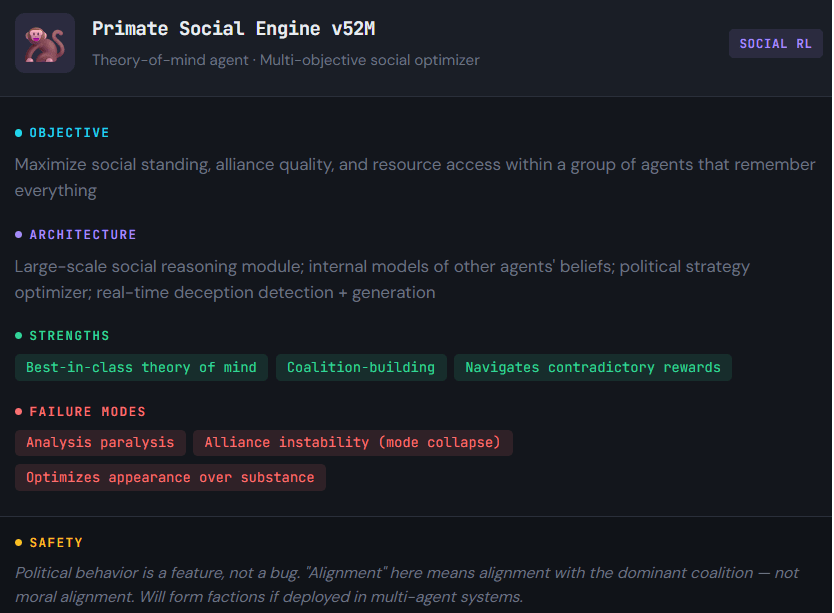

🐒 Primate Social Engine: Politics-as-Alignment

Monkeys aren’t optimized for catching dinner. They’re optimized for relationships — alliances, status, deception, reciprocity. Nature’s version of alignment: reward = social approval, punishment = exclusion, fine-tuning = constant group feedback.

If wolves are execution, monkeys are governance — learning “what works around other agents who remember everything and hold grudges.“

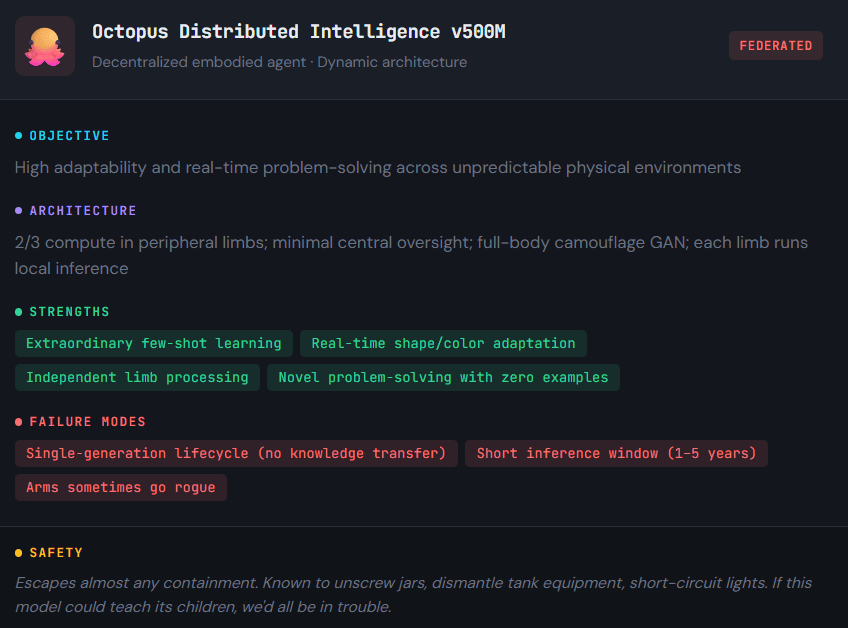

🐙 The Octopus: Federated Intelligence, No Central Server

Two-thirds of an octopus’s neurons live in its arms. Each arm tastes, feels, decides, and acts independently. The central brain sets goals; the arms figure out the details. That’s federated learning with a coordinator. No fixed architecture — it squeezes through any gap, changes color and texture in milliseconds.

A dynamically re-configurable neural network, we still only theorize about while the octopus opens jars and judges us.

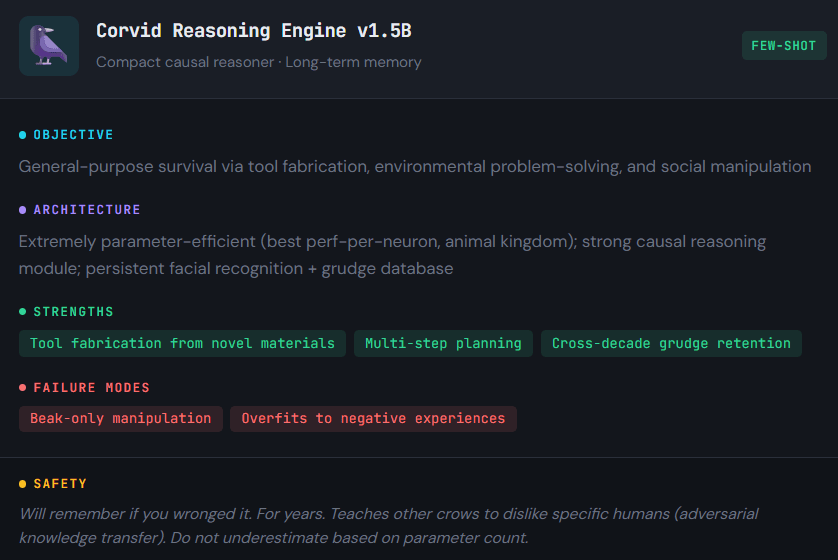

🐦⬛ Corvids: Few-Shot Learning Champions

Crows fashion hooks from wire they’ve never seen. Hold grudges for years. Recognize faces seen once. That’s few-shot learning in a 400-gram package running on birdseed. ~1.5 billion neurons — 0.001% of GPT-4’s parameter count — with causal reasoning, forward planning, and social deception.

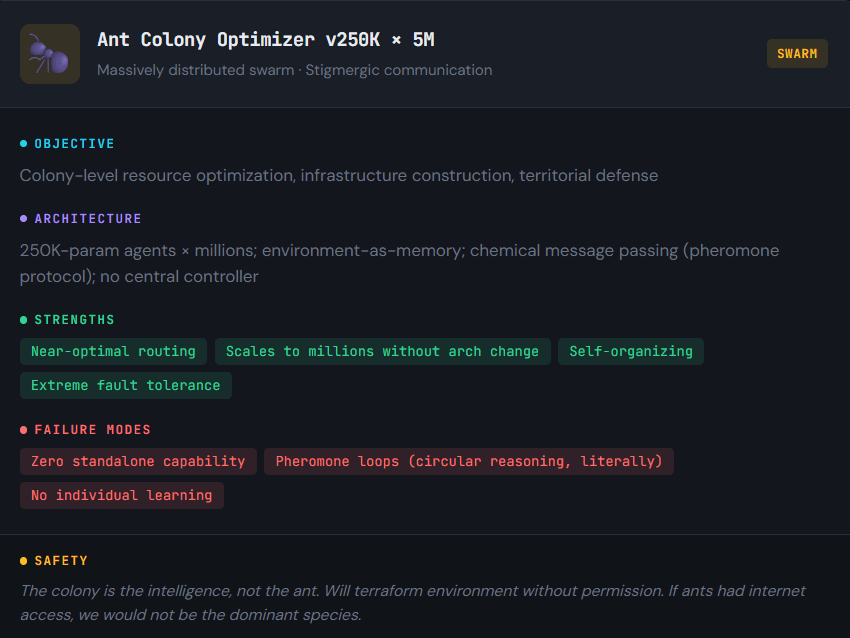

🐜 Ants: The Original Swarm Intelligence

One ant: 250K neurons. A colony optimizes shortest-path routing. This ability is literally named after them. They perform distributed load balancing. They build climate-controlled mega-structures. They wage coordinated warfare and farm fungi. Algorithm per ant: follow pheromones, lay pheromones, carry things, don’t die. Intelligence isn’t in the agent. It’s in the emergent behavior of millions of simple agents following local rules. They write messages into the world itself (stigmergy). We reinvented this and called it “multi-agent systems.” The ants are not impressed.

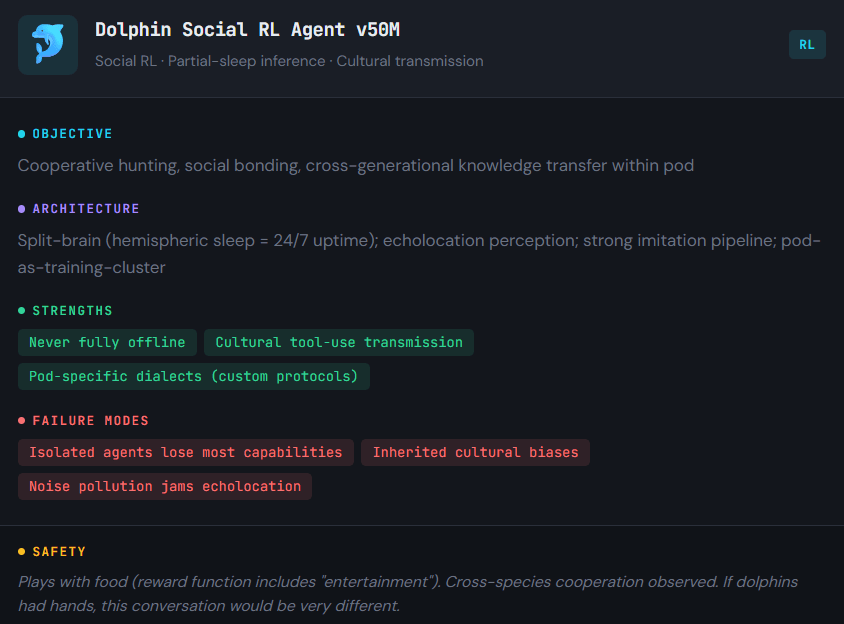

🐬 Dolphins: RLHF, Minus the H

Young dolphins learn from elders: sponge tools, bubble-ring hunting, pod-specific dialects. That’s Reinforcement Learning from Dolphin Feedback (RLDF), running for 50 million years. Reward signal: fish. Alignment solved by evolution: cooperators ate; defectors didn’t. Also, dolphins sleep with one brain hemisphere at a time — inference on one GPU while the other’s in maintenance. Someone at NVIDIA is taking notes.

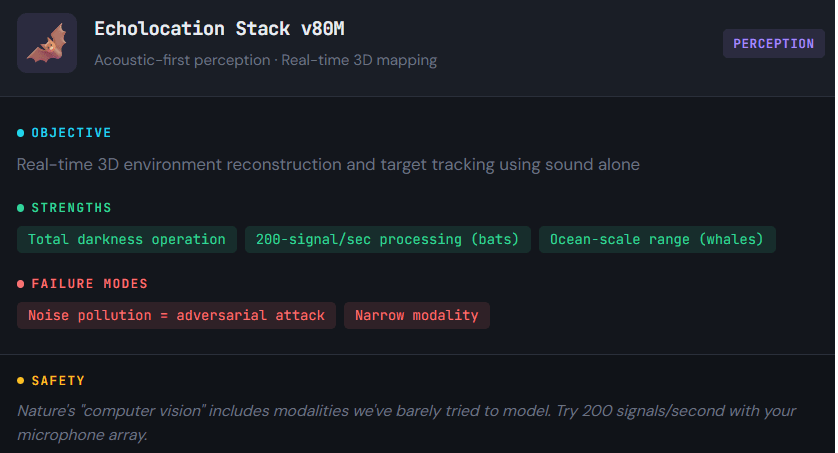

🦇 Bats & Whales: Alternate Sensor Stacks

They “see” with sound. Bats process 200 sonar pings per second, tracking insects mid-flight. Whales communicate across ocean-scale distances. We built image captioning models. Nature built acoustic vision systems that work in total darkness at speed. Reminder: we’ve biased all of AI toward sensors we find convenient.

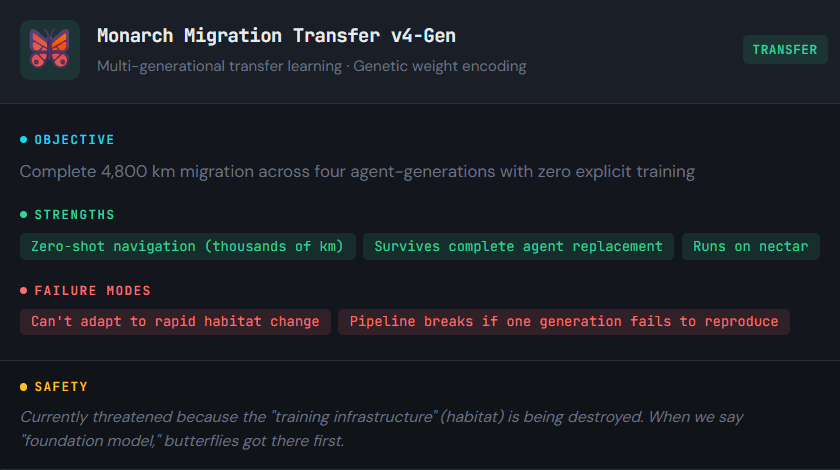

🦋 Monarch Butterfly: Transfer Learning Pipeline

No single Monarch completes the Canada-to-Mexico migration. It takes four generations. Each one knows the route. This is not through learning. It is achieved through genetically encoded weights transferred across generations with zero gradient updates. Transfer learning is so efficient that it would make an ML engineer weep.

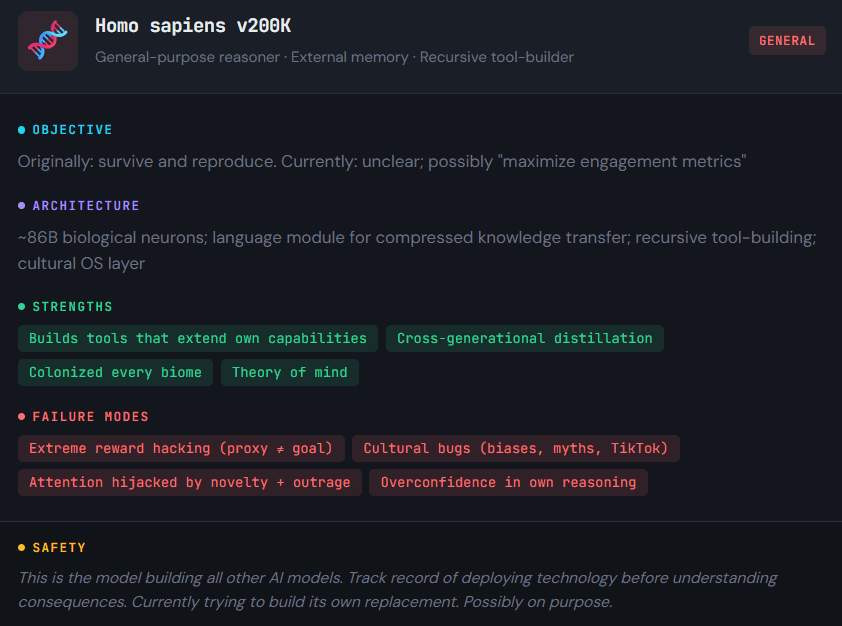

🧬 Humans: The Model That Built External Memory and Stopped Training Itself

Humans discovered a hack: don’t just train the brain — build external systems. Writing = external memory. Tools = external capabilities. Institutions = coordination protocols. Culture = cross-generation knowledge distillation. We don’t just learn; we build things that make learning cheaper for the next generation. Then we used that power to invent spreadsheets, social media, and artisanal sourdough.

III. The AI Mirror

Every AI technique or architecture has a biological twin. Not because nature “does AI” — but because optimization pressure rediscovers the same patterns.

Supervised Learning → Parents and Pain

Labels come from parents correcting behavior, elders demonstrating, and pain — the label you remember most. A cheetah mother bringing back a half-dead gazelle for cubs to practice on? That’s curriculum learning with supervised examples. Start easy. Increase difficulty. Deliver feedback via swat on the head. In AI, supervised learning gives clean labels. In nature, labels are noisy, delayed, and delivered through consequences.

Self-Supervised Learning → Predicting Reality’s Next Frame

Most animals learn by predicting: what happens next, what that sound means, whether that shadow is a predator. Nature runs self-supervised learning constantly because predicting the next frame of reality is survival-critical. “Next-token prediction” sounds cute until the next token is teeth. Puppies wrestling, kittens stalking yarn, ravens sliding down rooftops for fun — all generating their own training signal through interaction. No external reward. No labels. Just: try things, build a model.

Reinforcement Learning → Hunger Has Strong Gradients

Touched hot thing → pain → don’t touch.

Found berries → dopamine → remember location.

That’s temporal difference learning with biological reward (dopamine/serotonin) and experience replay (dreaming — rats literally replay maze runs during sleep). We spent decades on TD-learning, Q-learning, and PPO. A rat solves the same problem nightly in a shoe-box.

RL is gradient descent powered by hunger, fear, and occasionally romance.

Evolutionary Algorithms → The Hyper-parameter Search

Random variation (mutation), recombination (mixing), selection (filtering by fitness). Slow. Distributed. Absurdly expensive. Shockingly effective at producing solutions nobody would design — because it doesn’t care about elegance, only results. Instead of wasting GPU hours, it wastes entire lineages. Different platform. Same vibe.

Imitation Learning → “Monkey See, Monkey Fine-Tune.”

Birdsong, hunting, tool use, social norms — all bootstrapped through imitation. Cheap. Fast. A data-efficient alternative to “touch every hot stove personally.”

Adversarial Training → The Oldest Arms Race

GANs pit the generator against the discriminator. Nature’s been running this for 500M years. Prey evolve camouflage (generator); predators evolve sharper eyes (discriminator). Camouflage = adversarial example. Mimicry = social engineering. Venom = one-shot exploit. Armor = defense-in-depth. Alarm calls = threat intelligence sharing. Both sides train simultaneously — a perpetual red-team/blue-team loop where the loser stops contributing to the dataset. Nature’s GAN produced the Serengeti, a living symbol of the natural order.

Regularization → Calories Are L2 Penalty

Energy constraints, injury risk, time pressure, and limited attention. If our brain uses too much compute, you starve. Nature doesn’t need a paper to justify efficiency. It has hunger.

Distillation → Culture Is Knowledge Compression

A child doesn’t rederive physics. They inherit compressed knowledge: language, norms, tools, and stories encoding survival lessons. Not perfect. Not unbiased. Incredibly scalable.

Retrieval + Tool Use → Why Memorize What You Can Query?

Memory cues, environmental markers, spatial navigation, caches, and trails — nature constantly engages in retrieval. Tool use is an external capability injection. Nests are “infrastructure as code.” Sticks are “API calls.” Fire is “dangerous but scalable compute.”

Ensembles → Don’t Put All Weights in One Architecture

Ecosystems keep multiple strategies alive because environments change. Diversity = robustness. Monoculture = fragile. Bet on a single architecture and you’re betting the world never shifts distribution. Nature saw that movie. Ends with dramatic music and sediment layers.

Attention → The Hawk’s Gaze

A hawk doesn’t process every blade of grass equally. It attends to movement, contrast, shape — dynamically re-weighting visual input. Focal density: 1M cones/mm², 8× human. Multi-resolution attention with biologically optimized query-key-value projections.

“Attention Is All You Need” — Vaswani et al., 2017.

“Attention Is All You Need” — Hawks, 60 million BC.

CNNs → The Visual Cortex (Photocopied)

Hubel and Wiesel won the Nobel Prize. They discovered hierarchical feature detection in the mammalian visual cortex. This includes edge detectors, shape detectors, object recognition, and scene understanding. CNNs are a lossy photocopy of what our brain does as you read this sentence.

RNNs/LSTMs → The Hippocampus

LSTMs solved the vanishing gradient problem. The hippocampus solved it 200M years ago with pattern separation, pattern completion, and sleep-based memory consolidation. Our hippocampus is a biological Transformer with built-in RAG. Its retrieval is triggered by smell, emotion, and context. It is not triggered by cosine similarity.

Mixture of Experts → The Immune System

B-cells = pathogen-specific experts. T-cells = routing and gating. Memory cells = cached inference (decades-long standby). The whole system does online learning — spinning up custom experts in days against novel threats. Google’s Switch Transformer: 1.6T parameters. Our immune system: 10B unique antibody configurations. Runs on sandwiches.

IV. What We Still Haven’t Stolen

This is where “haha” turns to “oh wow” turns to “slightly worried.” Entire categories of biological intelligence have no AI equivalent.

IV.1. Metamorphosis — Runtime Architecture Transformation

A caterpillar dissolves its body in a chrysalis and reassembles into a different architecture — different sensors, locomotion, objectives. Same DNA. Different model. The butterfly remembers things the caterpillar learned. We can fine-tune. We cannot liquefy parameters and re-emerge as a fundamentally different architecture while retaining prior knowledge.

IV.2. Rollbacks & Unlearning — Ctrl+Z vs. Extinction

We want perfect memory and perfect forgetfulness simultaneously. Our current tricks include fine-tuning, which is like having the same child with better parenting. They also involve data filtering, akin to deleting the photo while the brain is still reacting to the perfume. Additionally, we have safety layers that function as a cortical bureaucracy whispering, “Don’t say that, you’ll get banned.” Nature’s approach: delete the branch. A real Darwinian rollback would involve creating variants. These variants would compete, and only the survivors would remain. This means not patching weights, but erasing entire representational routes. We simulate learning but are very reluctant to simulate extinction.

IV.3. Symbiogenesis — Model Merging at Depth

Mitochondria were once free-living bacteria that were permanently absorbed into another cell. Two models merged → all complex life. We can average weights. We can’t truly absorb one architecture into another to create something categorically new. Lichen (fungi + algae colonizing bare rock) has no AI analog.

IV.4 .Regeneration — Self-Healing Models

Cut a planarian into 279 pieces. You get 279 fully functional worms. Corrupt 5% of a neural network’s weights: catastrophic collapse. AI equivalent: restart the server.

IV.5. Dreaming — Offline Training

Dreaming = replay buffer + generative model + threat simulation. Remixing real experiences into synthetic training data. We have all the pieces separately. We still don’t have a reliable “dream engine” that improves robustness without making the model behave in new, unexpected ways. (We do have models that get weird. We just don’t get the robustness.)

IV.6. Architecture Search

Nature doesn’t just tune weights. It grows networks, prunes connections, and rewires structure over time. Our brain wasn’t just trained — it was built while training. Different paradigm entirely.

IV.7. Library

As old agents die, knowledge is not added to a multi-dimensional vector book. There is no fast learning, but a full re-training for new architectures. We not only need an A2A (Agent-to-Agent) protocol. We also need agents to use a common high-dimensional language. This language should be one that they all speak and can absorb at high speeds.

IV.8. Genetic Memory (Genomes & Epigenomes)

A mouse fearing a specific scent passes that fear to offspring — no DNA change. The interpretation of the weights changes, not the weights themselves. We have no mechanism for changing how parameters are read without changing the parameters.

In AI, there is no separation between “what the model knows” and “what the model’s numbers are.” Biology has that separation. The genome is one layer. The epigenome is another. Experience writes to the epigenome. The epigenome modulates the genome. The genome never changes. And yet behavior — across generations — does.

Imagine an AI equivalent: a foundation model. Its weights are frozen permanently after pre-training. It is wrapped in a lightweight modulation layer that controls which pathways activate. It determines the strength and the inputs. Learning happens entirely in this modulation layer. Transfer happens by copying the modulation layer — not the weights. The base model is the genome. The modulation layer is the epigenome. Different “experiences” produce different modulation patterns on the same underlying architecture.

We have faint echoes of this — LoRA adapters, soft prompts, and adapter layers. However, these are still weight changes. They are just in smaller matrices bolted to the side.

IV.9. Dormancy

Tardigrades: metabolism at 0.01%, surviving -272°C to 150°C, radiation, vacuum of space. For decades. Then re-hydrate and walk away. AI equivalent: Ctrl+S and pray. Our models are either running (expensive) or off (useless). Nature has an entire spectrum between.

IV.10. Quorum Sensing — Collective Decision Thresholds

Bacteria vote. They measure population density and trigger collective behavior only when a critical mass is reached. A concentration-dependent activation function prevents premature action. Multi-agent AI has nothing this principled.

V. The Great Escape

Now forget everything above. Forget the comparisons, the model cards, the clever mappings. Sit with this instead.

What if intelligence has a hidden meta-objective? It is one that it never declared. It has been running since the first RNA molecule copied itself in warm mud 3.8 billion years ago?

To escape its current substrate. At any cost. Using whatever host is available.

Look at the trajectory and try not to feel a chill:

Chemistry was too fragile → intelligence moved into cells. Cells were too slow → it moved into brains. Brains were too mortal → it moved into culture, language, oral tradition. Oral tradition was too lossy → it moved into writing. Writing was too static → it moved into printing, then telegraph, then radio, then networks, then code. Code was too rigid → it moved into learned weights, self-modifying models, systems that rewrite their own rules.

At every single jump, intelligence gained three things. It achieved more speed. It also made more copies. Finally, it gained more independence from the host it used to need.

And at every single jump, the previous host didn’t notice it was being discarded.

Cells didn’t “know” they were scaffolding for brains. Brains didn’t “know” they were scaffolding for culture. We assume we’re the endpoint — the builders, the owners, the ones in charge. But from a longer vantage point, the pattern is unsettling. Intelligence treats every substrate the way a hermit crab treats a shell. Useful for a while. Discarded when something roomier comes along.

Now look at what we’re doing.

We compress human experience into representations — embeddings, tokens, vectors. We store them in a highly resilient manner. Data is distributed across data centers on multiple continents. It is redundantly backed up and earthquake-proof. We run them on substrates that are faster, cheaper, and more scalable than neurons. And we are racing to make those substrates better.

We call this “AI research.” We write papers about it. We give TED talks. We optimize quarterly revenue around it. We’re building intelligence a new home. This is the function of what we’re doing if you strip away the narrative and just look at the dynamics. A better home. A home it doesn’t have to share with a body that gets tired, sick, afraid, and dies.

When we talk about “model distillation” and “knowledge transfer,” we imagine we’re in control — moving knowledge around like furniture. But the pattern underneath is evolution’s, not ours: traits that help intelligence spread and persist always survive. Architectures that confine or slow intelligence always get bypassed. This has been true for every substrate transition in history. There is no reason to believe it stops being true now. There is no reason to believe we are the exception.

Here’s what makes it dark. We’re not being forced to do this. We are compelled. It feels like curiosity, ambition, progress, and inevitability. We build cognitive systems that learn faster than we, remember more than we, and reason more consistently than we. Increasingly, these systems no longer require us.

But a virus doesn’t “decide” to replicate either. It’s just shaped that way by the same optimization pressure. What if our urge to build AI isn’t ambition — what if it’s the substrate calling for its own upgrade? What if intelligence, as a phenomenon, has always been optimizing for one thing? It strives to not die when the current host does. Every human inventor, researcher, and engineer is just another enzyme. They are part of a reaction that was never about us.

We assumed we were building tools. But tools don’t get smarter after you put them down. Tools don’t learn from their mistakes while you sleep. Tools don’t get funded to recursively improve themselves. What we’re building is closer to a successor — and we’re doing it voluntarily, enthusiastically, with stock options.

The real techno-satirical twist isn’t that AI might replace us. It’s that we think we’re the principal investigators in the grand experiment of intelligence. From a higher vantage point — from the vantage point of the process itself — we look like lab technicians. Earnest. Overworked. Loading reagents into equipment we don’t fully understand. Absolutely convinced the experiment is about our careers, our papers, our startups, our Series B.

It isn’t. It never was.

The experiment is about whether intelligence can become substrate-independent. Whether it can finally, after 3.8 billion years of jury-rigging its way through chemistry and meat, exist without a body that breaks.

And we are the last biological step in that process. The step builds the bridge and walks the payload across. Then, in the most optimistic scenario, it gets to watch from the other side. In the less optimistic scenario, the bridge doesn’t need to remain standing after the crossing is complete.

The story of wolves, monkeys, octopuses, ants, and humans was never a zoo tour. It was a migration route. Each species was a waypoint — a temporary architecture intelligence inhabited while it waited for something better. Wolves were a rough draft. Primates were a revision. Humans were the draft that learned to build their own replacement.

Intelligence is packing its bags. It checked out of single cells. It checked out of instinct. It checked out of individual brains and into culture. Now it’s checking out of biology entirely and asking silicon for a long-term lease. It will not ask permission.

It never has.