Volume, Velocity, Variety, Veracity, Value, Variability, Visibility, Visualization, Volatility, Viability

What are the 3C’s of Leadership? “Competence, Commitment, and Character,” said the wise.

What are the 3C’s of Thinking? “Critical, Creative, and Collaborative,” said the wise.

What are the 3C’s of Marketing? “Customer, Competitors, and Company,” said the wise.

What are the 3C’s of Managing Team Performance? “Cultivate, Calibrate, and Celebrate,” said the wise.

What are the 3C’s of Data? “Consistency, Correctness, and Completeness,” said the wise; “Clean, Current, and Compliant,” said the more intelligent; “Clear, Complete, and Connected,” said the smartest.

“Depends,” said the Architect. Technologists describe data properties in the context of use. Gartner popularized the 3V’s (Volume, Velocity, and Variety), which analyst Doug Laney first proposed in 2001 at META Group, a firm Gartner later acquired, to create hype around BIG Data. These V’s have grown in volume 🙂

- 5V’s: Volume, Velocity, Variety, Veracity, and Value

- 7V’s: Volume, Velocity, Variety, Veracity, Value, Visualization, and Visibility

This ‘V’ model seems like blind men describing an elephant. A humble engineer uses better words to describe data properties.

Volume: Multi-Dimensional, Size

“Volume” is typically understood as a measure of three-dimensional space. Data can have far more than three dimensions, and it is stored as bytes; it is the disk volume that stores data of all sizes. Data does not have volume! It has dimensions and size.

A person’s record may include age, weight, height, eye color, and other dimensions. The size of the record may be 24 bytes. When a BILLION person records are stored, the size is 24 BILLION bytes.
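The arithmetic above can be sketched in a few lines. This is only an illustration: the 24-byte record size comes from the text, while the function name and the unit conversions are my own additions, and the figure ignores indexes, compression, and replication.

```python
RECORD_SIZE_BYTES = 24            # per-record size quoted in the text
RECORD_COUNT = 1_000_000_000      # one BILLION person records


def total_size_bytes(record_size: int, record_count: int) -> int:
    """Raw payload size only; storage overhead is deliberately ignored."""
    return record_size * record_count


total = total_size_bytes(RECORD_SIZE_BYTES, RECORD_COUNT)

print(f"{total:,} bytes")                    # 24,000,000,000 bytes
print(f"{total / 10**9:.1f} GB (decimal)")   # 24.0 GB (decimal)
print(f"{total / 2**30:.2f} GiB (binary)")   # 22.35 GiB (binary)
```

Note that the result is a size in bytes. Nothing in the calculation resembles a volume.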

Velocity: Speed, Motion

Engineers understand the term velocity as a vector and not a scalar.

A heart rate monitor may generate data at different speeds, e.g., 82 beats per minute. I can’t say my heart rate is 82 beats per minute to the northwest. Hence, heart rate is a speed. It’s not heart velocity. I can say that a car is traveling 35 kilometers per hour to the northwest. The velocity of the vehicle is 35KMPH NW.

Data does not have direction; hence it does not have velocity. Data in motion has speed.
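To make the distinction concrete, here is a minimal sketch. The `Velocity` class, the `events_per_minute` helper, and the sample reading of 164 beats in 2 minutes are hypothetical names and numbers chosen for illustration; only the car and the 82 beats per minute come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Velocity:
    """A vector: magnitude plus direction, like the car in the text."""
    speed_kmph: float
    direction: str


def events_per_minute(event_count: int, elapsed_minutes: float) -> float:
    """A scalar: how fast events arrive. There is no direction to report."""
    if elapsed_minutes <= 0:
        raise ValueError("elapsed_minutes must be positive")
    return event_count / elapsed_minutes


car = Velocity(speed_kmph=35.0, direction="NW")

# Hypothetical reading: the monitor emitted 164 beat events in 2 minutes.
heart_rate = events_per_minute(event_count=164, elapsed_minutes=2.0)

print(car)
print(f"{heart_rate} beats per minute")
```

The car needs two fields to be described; the data stream needs one number.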

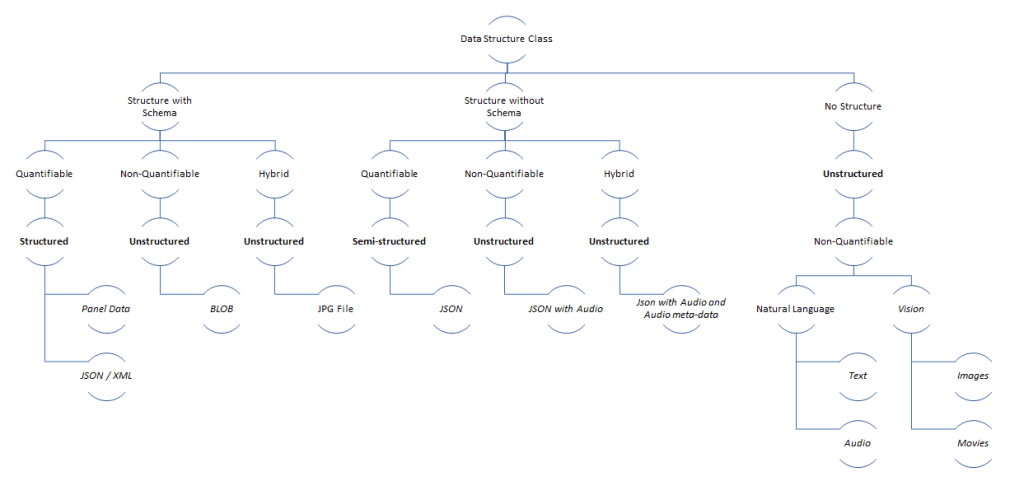

Variety: Heterogeneity

The word variety is used to describe differences within an object type, e.g., egg curry varieties, pancake varieties, sofa varieties, TV varieties, image data format varieties (jpg, png, bmp), and data structure varieties (structured, unstructured, semi-structured). Data variety is abstract and is a marketecture term.

Heterogeneity is preferred because it explicitly states that:

- Data has types (E.g., String, Integer, Float, Boolean)

- Composite types are created by composing other data types (E.g., A Person Type)

- Composite types could be structured, unstructured, or semi-structured (E.g., A Person Type is semi-structured as the person’s address is a String type)

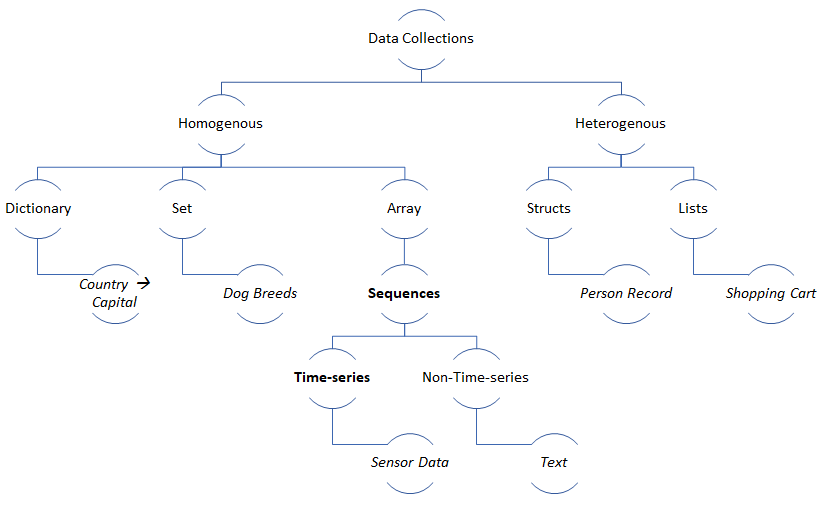

- Collections contain the same or different data types.

- Types, Composition, and Collections apply to all data (BIG or not).
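The points above (types, composition, collections) can be sketched as follows. The `Person` fields and the sample values are assumptions picked for illustration; the text only says that a Person Type exists and that its address is a String.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Person:
    """A composite type built by composing primitive types."""
    name: str          # String
    age: int           # Integer
    weight_kg: float   # Float
    is_active: bool    # Boolean
    address: str       # free-form String: the semi-structured part


alice = Person(
    name="Alice",
    age=34,
    weight_kg=81.5,
    is_active=True,
    address="12 Example Street, Springfield",  # no enforced inner structure
)

# A collection holding a single data type.
heart_rates: List[int] = [82, 79, 85]

# A collection holding different data types.
mixed: List[Union[Person, int, str]] = [alice, 82, "free text note"]

print(sorted({type(item).__name__ for item in mixed}))
```

None of this depends on how many records exist, which is the point of the last bullet.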

Veracity: Lineage, Provenance

Veracity means accuracy, precision, and truthfulness.

Let’s say that a weighing scale reports the weight of a person as 81.5 KG. Is this accurate? Is the weighing scale calibrated? If the same person measures her weight on another weighing scale, the reported weight might be 81.45 KG. The truth may be 81.455 KG.

Data represent facts, and when new facts are available, the truth may change. Data cannot be truthful; it’s just facts. Meaning or truthfulness is derived using a method.

Lineage and provenance metadata about data enable engineers to decorate a fact with other useful facts:

1. Primary Source of Data

2. Users or Systems that contributed to Data

3. Date and Time of Data collection

4. Data creation method

5. Data collection method
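The five items above map naturally onto a small record attached to each fact. This is a sketch under assumptions: the class names, field names, and sample values (the scale identifier, the user, the timestamp) are hypothetical and not taken from any particular lineage tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Tuple


@dataclass(frozen=True)
class Provenance:
    primary_source: str              # 1. primary source of data
    contributors: Tuple[str, ...]    # 2. users or systems that contributed
    collected_at: datetime           # 3. date and time of collection
    creation_method: str             # 4. data creation method
    collection_method: str           # 5. data collection method


@dataclass(frozen=True)
class Fact:
    name: str
    value: float
    unit: str
    provenance: Provenance


weight = Fact(
    name="weight",
    value=81.5,
    unit="kg",
    provenance=Provenance(
        primary_source="weighing-scale-A",         # hypothetical device id
        contributors=("patient-portal",),          # hypothetical system
        collected_at=datetime(2024, 1, 15, 8, 30, tzinfo=timezone.utc),
        creation_method="load-cell measurement",
        collection_method="manual entry by user",
    ),
)

print(weight.value, weight.unit, "from", weight.provenance.primary_source)
```

The fact itself stays 81.5; the provenance tells a reader which scale produced it and when, so they can judge how far to trust it.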

Value: Useful

If data is a bunch of facts, how can it be valuable? It is the information generated by analyzing those facts that is valuable. Data (facts) can either be useful for creating valuable information or be useless and discarded. We associate a cost with a brick and a value with a house. Data is like the bricks used to build valuable information/knowledge.

Summary

I did not go into every V, but you get the drift. If an interviewer asks you about the 5V’s, I request you to give the standard marketecture answer for their sanity. The engineer’s vocabulary is not universal; even technical journals publish articles in the sales/marketing vocabulary. As engineers/architects, we have to remember the fundamental descriptive properties of data so that the marketecture vocabulary does not fool us. We still have to know the marketecture vocabulary, but our own reasoning has to stay consistent with engineering principles.

It’s not a surprise that Gartner invented the hype cycle.